During the years I have been involved in broadcasting and web development, I have done a lot of trial and error regarding encoding for the web. I have always used FFmpeg for the video part of the encoding, and recent improvements in FFmpeg have made the syntax much more straightforward, so let me show you how to get the best possible encoding for playback on the web using HTML5/Flash:

First of all, find out what resolution you want to target. If you’re advanced, you will want to encode multiple resolutions so the server or the user can choose whichever resolution is best suited. In some cases, however, that is too complicated, and finding one target size that represents the best compromise is often preferable.

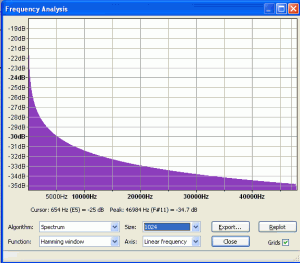

Finding the right resolution is a matter of balancing visual quality against performance: the more pixels, the harder it is for the computer to decode and display the signal. My choice is 768 x 432 pixels at 1 megabit per second (plus audio). It gives a decent image, not far from DVD quality, and just about all computers will display it without stuttering or dropping frames. We will encode the audio so well that it will be hard to tell apart from the original, which will actually make the viewer perceive the image quality as better than it is (I guess this report shows my point).
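To put the 768 x 432 at 1 megabit choice into numbers, here is a small Python sketch of the usual bits-per-pixel arithmetic. The frame rate is not stated in this post, so 25 fps is an assumption, and the function name is my own.

```python
def bits_per_pixel(bitrate_kbps: float, width: int, height: int, fps: float) -> float:
    """Average number of video bits available for each pixel of each frame."""
    bits_per_second = bitrate_kbps * 1000
    pixels_per_second = width * height * fps
    return bits_per_second / pixels_per_second


if __name__ == "__main__":
    # 768 x 432 is exactly 16:9, and both sides are multiples of 16
    # (the size of an H.264 macroblock).
    print(768 * 9 == 432 * 16, 768 % 16 == 0, 432 % 16 == 0)  # True True True
    # 1000 kbit/s of video at an assumed 25 fps
    print(round(bits_per_pixel(1000, 768, 432, 25), 3))  # 0.121
```

Plug in your own frame rate; at 30 fps the same bitrate is spread over proportionally more pixels.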

1-pass or 2-pass encoding?

So, when encoding we’re left with two choices: 1-pass or 2-pass encoding. 2-pass encoding is the obvious choice if you plan to stream your signal from a streaming server such as Adobe FMS or Wowza, using a protocol like RTMP. A 2-pass encoding keeps the stream at a predictable, near-constant bitrate, but without the artifacts and drawbacks known from CBR (Constant Bitrate). This is only relevant when using a real streaming server, since a predictable bitrate makes load balancing easier: you always know how many streams each server can handle, which is usually limited by the server’s network card.
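The capacity argument is simple division. A sketch in Python, assuming a 1 Gbit/s network card, 128 kbit/s of audio on top of the 1000 kbit/s video, and no protocol overhead or headroom (all of these are assumptions, not figures from this post):

```python
def streams_per_server(link_kbps: int, video_kbps: int, audio_kbps: int) -> int:
    """Whole number of streams that fit on one network link (no overhead)."""
    per_stream_kbps = video_kbps + audio_kbps
    return link_kbps // per_stream_kbps


if __name__ == "__main__":
    # Assumed: 1 Gbit/s network card, 1000 kbit/s video, 128 kbit/s audio
    print(streams_per_server(1_000_000, 1000, 128))  # 886
```

In practice you would keep some headroom below that figure, but the point stands: with a predictable per-stream bitrate, the division is all you need.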

If you just plan to stream from a regular web server, also known as progressive download, then you’re better off using 1-pass encoding, since 1-pass is faster to encode (roughly 40% faster) and, in my experience, gives you better visual quality for the same amount of data.

Let’s transcode!

Now it’s time for the actual transcoding using FFmpeg. What I do is as follows:

#1 – decode the audio of the input video file to WAV (uncompressed).

#2 – encode the WAV file to AAC using the Nero AAC encoder.

#3 – encode the video using FFmpeg

#4 – mux (combine) the video and audio together using MP4Box.
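If you run these steps often, a small Python driver saves typing. This is a sketch, not a drop-in tool: it assumes ffmpeg, neroAacEnc and MP4Box are on your PATH (on Linux the binary is usually named MP4Box, which Windows accepts too), and step 3 uses the 1-pass video command from this post. Passing arguments as lists avoids shell quoting problems entirely. By default it only prints the commands; add --run to execute them.

```python
import subprocess
import sys


def pipeline(src: str, out: str) -> list[list[str]]:
    """The four steps as argument lists (1-pass CRF video in step 3)."""
    return [
        # 1: decode the audio to uncompressed stereo WAV
        ["ffmpeg", "-i", src, "-y", "-ac", "2", "-f", "wav", "temp.wav"],
        # 2: encode the WAV file to AAC with Nero
        ["neroAacEnc", "-q", "0.35", "-if", "temp.wav", "-of", "temp.m4a"],
        # 3: encode the video only; -an leaves the audio out
        ["ffmpeg", "-i", src, "-vf", "scale=768:432", "-vcodec", "libx264",
         "-preset", "slow", "-tune", "film", "-y", "-an", "-crf", "22", "temp.mp4"],
        # 4: mux audio and video into the final file with MP4Box
        ["MP4Box", "-add", "temp.m4a#audio", out],
        ["MP4Box", "-add", "temp.mp4#video", out],
    ]


def run(steps: list[list[str]]) -> None:
    for cmd in steps:
        # check=True raises CalledProcessError as soon as one tool fails
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    steps = pipeline("input.mov", "out.mp4")
    if "--run" in sys.argv:
        run(steps)
    else:
        for cmd in steps:
            print(" ".join(cmd))  # dry run: show what would be executed
```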

Update! Since FFmpeg now offers a good AAC encoder, it’s no longer necessary to use Nero’s AAC encoder. You can therefore skip steps 1, 2 and 4 and go straight to step 3. All you have to do is drop the -an parameter (from the second pass only, if you encode in 2 passes) so FFmpeg encodes the audio as well. Nero would, however, still be my choice.
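With the built-in encoder, the whole job fits in one FFmpeg call. A Python sketch, assuming FFmpeg’s native aac encoder at 128 kbit/s stereo (that bitrate is my assumption, not a setting from this post), plus -movflags +faststart so the result is ready for progressive download without MP4Box:

```python
import subprocess
import sys


def one_step_command(src: str, out: str, crf: int = 22, audio_bitrate: str = "128k") -> list[str]:
    """Video and audio in a single FFmpeg run with the built-in AAC encoder."""
    return [
        "ffmpeg", "-y", "-i", src,
        "-vf", "scale=768:432", "-sws_flags", "lanczos",
        "-c:v", "libx264", "-preset", "slow", "-tune", "film", "-crf", str(crf),
        # no -an: the audio is encoded by FFmpeg's native AAC encoder
        "-c:a", "aac", "-b:a", audio_bitrate, "-ac", "2",
        # write the moov atom at the start of the file
        "-movflags", "+faststart",
        out,
    ]


if __name__ == "__main__":
    cmd = one_step_command("input.mov", "out.mp4")
    if "--run" in sys.argv:
        subprocess.run(cmd, check=True)
    else:
        print(" ".join(cmd))
```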

I use Windows and the following works great:

Video encoding (768×432 pixels at 1 megabit per second; I use -tune film, so switch to -tune animation for animated content):

Video encoding using 2-pass:

Pass 1:

ffmpeg -i "input.mov" -vf scale=768:432 -pass 1 -sws_flags lanczos -vcodec libx264 -preset slow -tune film -y -an -b:v 1000k -maxrate 1000k -bufsize 2000k -f rawvideo NUL

Pass 2:

ffmpeg -i "input.mov" -vf scale=768:432 -pass 2 -sws_flags lanczos -vcodec libx264 -preset slow -tune film -y -an -b:v 1000k -maxrate 1000k -bufsize 2000k -f mp4 temp.mp4
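The same two passes as a Python sketch. FFmpeg’s null muxer with os.devnull stands in for -f rawvideo NUL, so the script works on Windows and Unix alike, and -maxrate 1000k is included because libx264 ignores -bufsize unless a maximum rate is also given. Both passes must run in the same directory, since pass 2 reads the stats log that pass 1 writes.

```python
import os
import subprocess
import sys

# Video options shared by both passes
VIDEO_OPTS = [
    "-vf", "scale=768:432", "-sws_flags", "lanczos",
    "-c:v", "libx264", "-preset", "slow", "-tune", "film",
    "-b:v", "1000k", "-maxrate", "1000k", "-bufsize", "2000k",
]


def two_pass_commands(src: str, out: str) -> tuple[list[str], list[str]]:
    # Pass 1: only the stats log matters, so the encoded video is discarded
    pass1 = ["ffmpeg", "-y", "-i", src, *VIDEO_OPTS, "-pass", "1", "-an",
             "-f", "null", os.devnull]
    # Pass 2: reads the stats log from pass 1 and writes the real file
    pass2 = ["ffmpeg", "-y", "-i", src, *VIDEO_OPTS, "-pass", "2", "-an",
             "-f", "mp4", out]
    return pass1, pass2


if __name__ == "__main__":
    for cmd in two_pass_commands("input.mov", "temp.mp4"):
        if "--run" in sys.argv:
            subprocess.run(cmd, check=True)
        else:
            print(" ".join(cmd))
```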

Video encoding using 1-pass (the rest of the steps are the same for both 1-pass and 2-pass encoding):

ffmpeg -i "input.mov" -vf scale=768:432 -vcodec libx264 -preset slow -tune film -y -an -crf 22 temp.mp4

Audio encoding:

ffmpeg -i "input.mov" -y -ac 2 -f wav temp.wav

neroAacEnc -q 0.35 -if temp.wav -of temp.m4a

Muxing together:

mp4box -add temp.m4a#audio "out.mp4"

mp4box -add temp.mp4#video "out.mp4"
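MP4Box also accepts several -add options in one call. Here is a Python sketch of the mux step done that way. The -new option forces a fresh output file; as I understand MP4Box, -add otherwise adds tracks to an existing out.mp4, so re-running the mux would duplicate them. The executable name MP4Box is an assumption about your install.

```python
import subprocess
import sys


def mux_command(video: str, audio: str, out: str) -> list[str]:
    """One MP4Box call that adds both tracks and always writes a fresh file."""
    return ["MP4Box", "-add", f"{video}#video", "-add", f"{audio}#audio", "-new", out]


if __name__ == "__main__":
    cmd = mux_command("temp.mp4", "temp.m4a", "out.mp4")
    if "--run" in sys.argv:
        subprocess.run(cmd, check=True)
    else:
        # prints: MP4Box -add temp.mp4#video -add temp.m4a#audio -new out.mp4
        print(" ".join(cmd))
```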

Voila! You have the best H.264 encoding in town!

Notice that I use -f rawvideo NUL for my first pass. NUL is the Windows null device, so FFmpeg does not write an output file; all we want from the first pass is the stats file for the second pass. This speeds up the first pass a bit. Also notice the -an parameter, which tells FFmpeg not to encode audio since we do that with Nero instead; again a minor performance gain.

A great bonus of using MP4Box is that it places the moov atom at the beginning of the file. This lets the file start playing immediately when served using progressive download. FFmpeg, on the other hand, places the moov atom at the end of the file, so you have to download the whole file before playback can start, because only the moov atom can tell the player how to interpret the H.264 file. If you want to know the deeper explanation behind this, you can get it here and here.

(Update, August 2013: FFmpeg now supports this too; adding -movflags +faststart makes it move the moov atom to the beginning of the file after encoding.)
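To check whether a file is ready for progressive download, you can look at the order of its top-level boxes (atoms). Each box starts with a 4-byte big-endian size and a 4-byte type; a size of 1 means a 64-bit size follows, and a size of 0 means the box runs to the end of the file. A minimal Python sketch that reports whether moov comes before mdat; the function names and the make_box demo helper are my own, and it only inspects the top level.

```python
import io
import os
import struct
from typing import BinaryIO


def top_level_boxes(f: BinaryIO) -> list[str]:
    """Return the types of the top-level MP4 boxes, in file order."""
    f.seek(0, os.SEEK_END)
    file_size = f.tell()
    boxes: list[str] = []
    offset = 0
    while offset + 8 <= file_size:
        f.seek(offset)
        size, box_type = struct.unpack(">I4s", f.read(8))
        header_len = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > file_size:
                break
            (size,) = struct.unpack(">Q", f.read(8))
            header_len = 16
        elif size == 0:  # the box extends to the end of the file
            size = file_size - offset
        if size < header_len:  # malformed size; stop instead of looping forever
            break
        boxes.append(box_type.decode("latin-1"))
        offset += size
    return boxes


def moov_before_mdat(f: BinaryIO) -> bool:
    boxes = top_level_boxes(f)
    return "moov" in boxes and "mdat" in boxes and boxes.index("moov") < boxes.index("mdat")


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Hypothetical demo helper: build one box with a 32-bit size field."""
    return struct.pack(">I", 8 + len(payload)) + box_type + payload


if __name__ == "__main__":
    fast = make_box(b"ftyp", b"isom") + make_box(b"moov") + make_box(b"mdat", b"xx")
    slow = make_box(b"ftyp", b"isom") + make_box(b"mdat", b"xx") + make_box(b"moov")
    print(moov_before_mdat(io.BytesIO(fast)), moov_before_mdat(io.BytesIO(slow)))  # True False
    # For a real file: with open("out.mp4", "rb") as f: print(moov_before_mdat(f))
```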

When doing the single-pass video encoding, we use the CRF parameter instead of a fixed data rate. CRF stands for Constant Rate Factor, a constant-quality mode: instead of a bitrate, you specify the quality of the encoding. The scale runs from 0 to 51, and lower means better; in practice I assign CRF a number between 15 (best) and 31 (worst), and you can use decimals if you like. I often use 22, which gives a fairly small file size while maintaining great visual quality.
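The CRF scale is exponential. A common x264 rule of thumb (a rough guide, not a guarantee, since results depend heavily on the source) is that raising CRF by 6 roughly halves the file size and lowering it by 6 roughly doubles it. A small Python sketch of that estimate relative to my usual 22:

```python
def relative_size(crf_from: float, crf_to: float) -> float:
    """Estimated size ratio when changing CRF (rule of thumb: +6 CRF halves the size)."""
    for crf in (crf_from, crf_to):
        if not 0 <= crf <= 51:  # libx264's CRF scale for 8-bit video
            raise ValueError(f"CRF {crf} is outside 0-51")
    return 2 ** ((crf_from - crf_to) / 6)


if __name__ == "__main__":
    for crf in (16, 19, 22, 25, 28):
        print(crf, round(relative_size(22, crf), 2))
```

So a CRF 28 encode of the same source would, very roughly, come out at about half the size of the CRF 22 one.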

Why not let FFmpeg encode to AAC? Well, in short, because FFmpeg lacked a good AAC encoder, but more on this issue in a later blog post. Update! This has now changed, and the built-in AAC encoder is comparable with the Nero AAC encoder.